A demonstration of "inspection" drones that use advanced AI, August 2, 2022. (Credit: Evgen Kotenko/Ukrinform/Abaca/Sipa USA (Sipa via AP Images))

At a time of deepening competition over artificial intelligence (AI) and the microchips, data centers, and critical minerals that power it, the world experienced a rare glimmer of multilateral cooperation last month. On March 21, 2024, the United Nations (UN) General Assembly adopted an unprecedented resolution to promote safe, secure, and trustworthy AI. Backed by the United States (US) and China, and co-sponsored by 120 other states, the resolution underlined the importance of respecting and promoting human rights in the design, development, and deployment of AI. While it is not yet clear whether the resolution will tangibly minimize the risks posed by advanced “frontier” AI models, UN member states appear to be waking up to the need to govern AI before AI governs all of us.

The latest global push to regulate AI comes amid growing concern about, and awareness of, its potential to do harm. A wide range of company executives, AI industry leaders, and AI experts fear that the technology could generate extinction-level threats, including weaponization and loss of control. There are real concerns that AI could automate between 20 and 60 percent of all jobs in upper-, middle-, and lower-income countries alike. By one estimate, as many as 300 million jobs could be vulnerable to automation by 2030. There are also concerns about how generative AI can supercharge disinformation, misinformation, and malinformation, including by amplifying the spread of synthetic content (better known as “deep fakes”).

Not surprisingly, countries are taking different approaches to regulating AI. Acutely conscious of the risks the technology poses to regime stability, China was an early mover: beginning in 2021, it issued restrictive rules on recommendation algorithms, deep fakes, generative AI, and fake news. Today, no company can produce advanced AI in China without government approval. The US began to act only in October 2023, when the White House issued an Executive Order to clamp down on frontier models and authenticate synthetic content. A dozen US states have also issued specific AI rules, which are starting to take effect. One concern is how to ensure these rules reduce risk without stifling innovation.

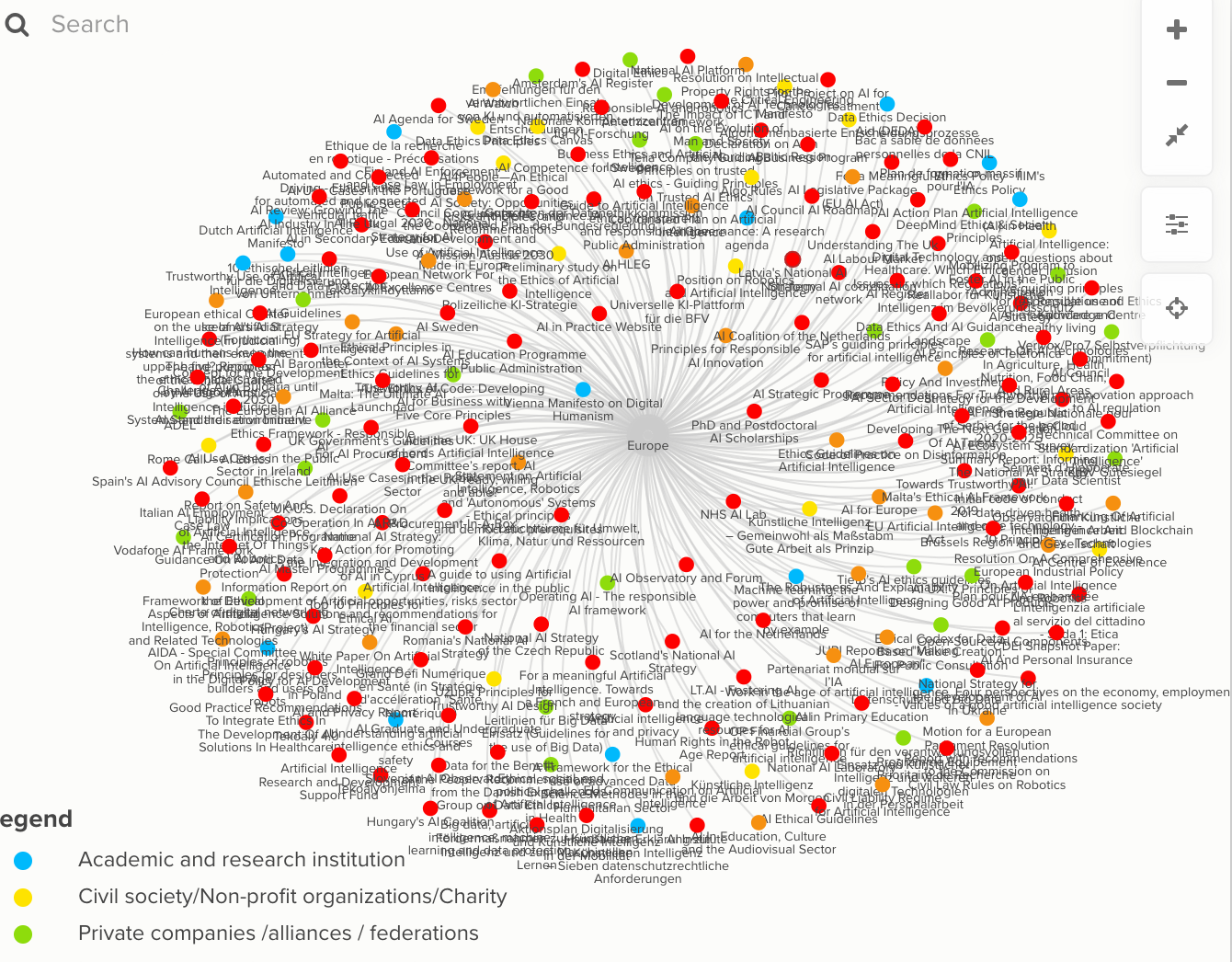

A screen grab showing part of a data visualization by the Igarapé Institute created from over 470 AI policies and recommendations by governments, private sector actors, and civil society actors across 60 countries.

The European Union (EU) is furthest ahead when it comes to promoting and enforcing safe and ethical AI. On March 13, 2024, the European Parliament approved the AI Act, the first-ever legal framework on AI. In addition to establishing an AI governance structure, the act prohibits AI practices that pose unacceptable risks, sets clear requirements for high-risk applications, and defines obligations for those who design and deploy AI. It reflects a deep understanding of how AI is trained, the many settings in which it is used, the range of potential risks, and how it should be treated in different application environments. While not perfect, it is a testament to incremental, deliberate, and technocratic engagement.

Facing a rash of AI regulation on the horizon, tech companies are also scrambling to create voluntary rules around AI safety and security. Dominant players like Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI signed voluntary principles on AI safety, security, and trust with the White House in 2023. These same firms, together with Adobe, IBM, TikTok, and others, agreed to an AI Elections Accord in February 2024 to better moderate AI-enabled deep fakes. A growing number of tech companies are also tagging and watermarking AI-generated content. While these are welcome steps, there is growing alarm about how much power is concentrated within a few companies and individuals. Not surprisingly, the US Federal Trade Commission, as well as authorities in the EU and the United Kingdom, have all recently opened investigations into anticompetitive practices.

While recent measures in China, the US, and the EU have captured global headlines, countries and companies around the world are establishing voluntary rules and standards to promote AI safety and security. New research from the Igarapé Institute and New America has identified over 470 sets of AI rules, standards, and principles across more than 60 countries between 2011 and 2024. Over two-thirds of these documents were issued by governments, intergovernmental bodies, and private companies, with the rest launched by digital rights groups, academic associations, and AI researchers. Most of them emphasize human-centered design and control; fostering digital inclusion; protecting human rights, safety, and security; and transparency and accountability.

But while AI regulation is spreading internationally, it is unevenly distributed. The Igarapé Institute and New America found that 63 percent of the identified principles were created in Europe, the US, and China. Just two percent were developed in African countries, five percent in Latin America and the Caribbean, and 19 percent in the rest of Asia. A clear AI divide is emerging in both capability and regulation, one that reflects structural dynamics between comparatively wealthy and poor countries. Put simply, most AI policy and standards are being developed and applied in the US, the EU, and China. This is hardly surprising, since this is where most of the development, capital, and talent is concentrated; rates of investment in and adoption of AI are much lower in poorer parts of the world. As a result, the regimes being developed risk overlooking the political, social, economic, and cultural variations across the Global South.

Nevertheless, with AI awareness higher than it’s ever been, a new era of AI regulation is underway. While the UN is demonstrating that global agreements are possible in an era of deepening geopolitical competition, the vast majority of action is happening at the regional, national, and subnational levels. To be sure, more global regulation is essential, potentially including an international agency along the lines of the International Atomic Energy Agency or the Intergovernmental Panel on Climate Change. Meanwhile, multi-stakeholder engagement is crucial, especially when it involves governments, technology companies, and digital rights groups. Governments would be well advised to create national AI authorities, including dedicated cabinet-level officials, as the United Arab Emirates and others have done. Given the pace and scale of AI development, national authorities will need to be more agile and flexible than ever. This is particularly important as the world moves from voluntary to enforced standards for ethical and safe AI.

Robert Muggah is the co-founder of the Igarapé Institute.